Helen Toner is director of strategy and foundational research grants at the Center for Security and Emerging Technology, Georgetown University’s in-house technology policy think tank. A policy researcher by trade who spent a year in China learning about the country’s AI ecosystem, she has also become a leading advocate for AI regulation, most recently testifying before Congress on the issue. Between 2021 and 2023, Toner was also a member of the board of OpenAI, where she was one of four members who voted to remove Sam Altman as CEO last November.

In this lightly edited Q&A, we discussed the U.S.-China AI race and who is ahead, whether leading companies are doing enough to address the risks posed by AI, and the prospects of AI safety cooperation between the two superpowers.

Illustration by Lauren Crow

Q: How did you get started studying China’s AI policies?

A: I first got interested in AI and AI policy around 2014. One of the areas that seemed especially interesting to me was the national security and foreign relations side of AI policy. In 2016, I was working at Open Philanthropy in San Francisco [a research foundation that issues grants based on the principles of effective altruism], when they were scaling up their work on AI. As I got deeper into researching AI and national security, it quickly became clear to me that the next word out of anyone’s mouth after “AI” and “national security” was going to be “China,” but there was barely anyone who knew much about all three. I’ve always loved learning languages, and I had already started teaching myself Chinese on the side. So I figured that if I could actually go deeper on China I could partially fill that gap. That’s how I decided to spend most of 2018 in Beijing learning Chinese and getting to know the AI sector.

Probably the most interesting opportunity I had in China was to go to a small, invite-only conference at the National University of Defense Technology in Changsha on national security and AI, which had about 50 or 60 attendees, including fewer than 10 foreigners. The sentiment was very enthusiastic about using AI in defense and national security applications. This was prior to the big 2022 shift, when ChatGPT came out and suddenly everyone was talking about language models and general purpose models; but it was a couple of years after DeepMind beat the world champion at Go, so the conversation was focused on reinforcement learning and gameplay.

South Korean professional Go player Lee Sedol participates in a best-of-five-game competition of Go against Google DeepMind’s AI program, AlphaGo, March 2016. Credit: Google DeepMind via YouTube

How would you compare the trajectories of AI development in the U.S. and China since then?

| BIO AT A GLANCE | |
|---|---|
| AGE | 32 |
| BIRTHPLACE | Melbourne, Victoria, Australia |
| CURRENT POSITION | Director of Strategy and Foundational Research Grants at Georgetown University’s Center for Security and Emerging Technology |

There are some similarities. In both places, the level of interest in and enthusiasm for generative AI has outstripped its practical usefulness — at least for now. There’s been a big wave of investment in startups that are planning to use generative AI to do something. I think the expectations that investors have of making big money pretty fast are probably not going to be met. At the same time, some investors understand the underlying trend of AI systems getting more advanced and having lots of potential economic value if you can figure out how to implement them. That partially explains why on both the U.S. and the Chinese side, you’re seeing leadership from big companies that are able to devote large amounts of money to R&D without necessarily expecting a quick profit.

On both sides, there are also a small number of up-and-comers who have really great talent that are able to challenge the big players. On the Chinese side, DeepSeek comes to mind as one of the top players that seemed to come out of nowhere. On the U.S. side, you have companies like OpenAI or Anthropic that also came out of nowhere, unless you were really paying attention to those top researchers.

On the Chinese side, there’s a little bit more involvement from academia, or academia-government partnerships, as opposed to the U.S. side where you have the real dominance of large Silicon Valley-based tech companies. We’ll see how that changes. A lot of people are expecting a big bubble to burst. I tend to think we’ll see more like a mini-bubble bursting, similar to the dotcom bubble in the sense that the level of excitement about the Internet and the investment in it got ahead [of its actual capabilities], even though the underlying technological improvements were real and important. We might see a similar recalibration, and questions about what kinds of companies, products, and expectations we want from AI on both sides of the Pacific.

When you say the level of investment is currently outstripping practical usefulness, is that because the technology hasn’t caught up, or because of, say, a lack of imagination about what practical applications the technology could have?

It’s a technology and an implementation question. If you look at the history of big technologies, such as electricity or the Internet, the gap between something being invented and something being really rolled out widely and integrated can be decades. That’s partly because implementation takes time to figure out, but partly also because it takes time to make sense of and get the most out of any technology.

There are also technological limitations: the current generation of generative AI tends to be unreliable in ways that people are now familiar with, such as hallucinations or confabulations where they’ll just make things up. But they’re also unreliable in other ways and pretty easy to hack or get to do things they’re not supposed to do, and that limits how useful they can be in settings where you need to get the right answer. That’s a limitation that means you can’t just mass roll it out into everything. As the technology advances, I expect some of those reliability issues to get better.

What do you see as the biggest risks to do with AI that call for regulation right now?

The biggest risk is that we get caught unaware by future progress in AI technology. The challenge here is that there’s huge disagreement between experts on what we should expect, compounded by the fact that the experts don’t have a good track record of predicting where AI is going to go next. Some very serious thinkers and extremely well capitalized companies believe we may develop very powerful and sophisticated AI systems within three to five years, systems that would be world changing and could be extremely dangerous. Other experts think that’s total fantasy. That is a challenging situation for policymakers who like to work from the place of expert consensus and then figure out how to handle the problems that experts agree exist.

The policy approach we need is one that is designed to manage that uncertainty and disagreement. Concretely, this means things like better testing and better measurement science for AI. Right now, on anything you would want to know about an AI system, we probably don’t have good ways to measure it. For example, you have many companies and nonprofits and universities developing different large language models, à la ChatGPT. If you follow the space, you start to notice that every time a company announces a new model, they will say that it’s the best, and then give you some metrics that show that. And then the next company will come along and say no, ours is the best. And then they’ll give you a slightly different set of metrics. Even on questions like: How good is this model at thinking through new problems? How good is it at writing? How good is it at helping me navigate tricky social situations? We don’t have any good ways of measuring that.

Then, if you’re looking towards the future: are we actually on track to build systems that are comparably smart to humans in important ways? Experts have huge disagreements online and in academic papers and commentaries about things like: do AI systems understand or can they reason about the world? They sound like simple things because they’re everyday words that we use casually when it comes to humans. But when you start to ask if an AI system is working its way through a math problem step by step, is that reasoning? Maybe it had math problems in its training data that were very similar. Maybe it’s just copy and pasting. If the math problems in its training data set were only sort of similar, then does it count? It’s all very ad hoc, the way that we test them, and also the way that we interpret the results.

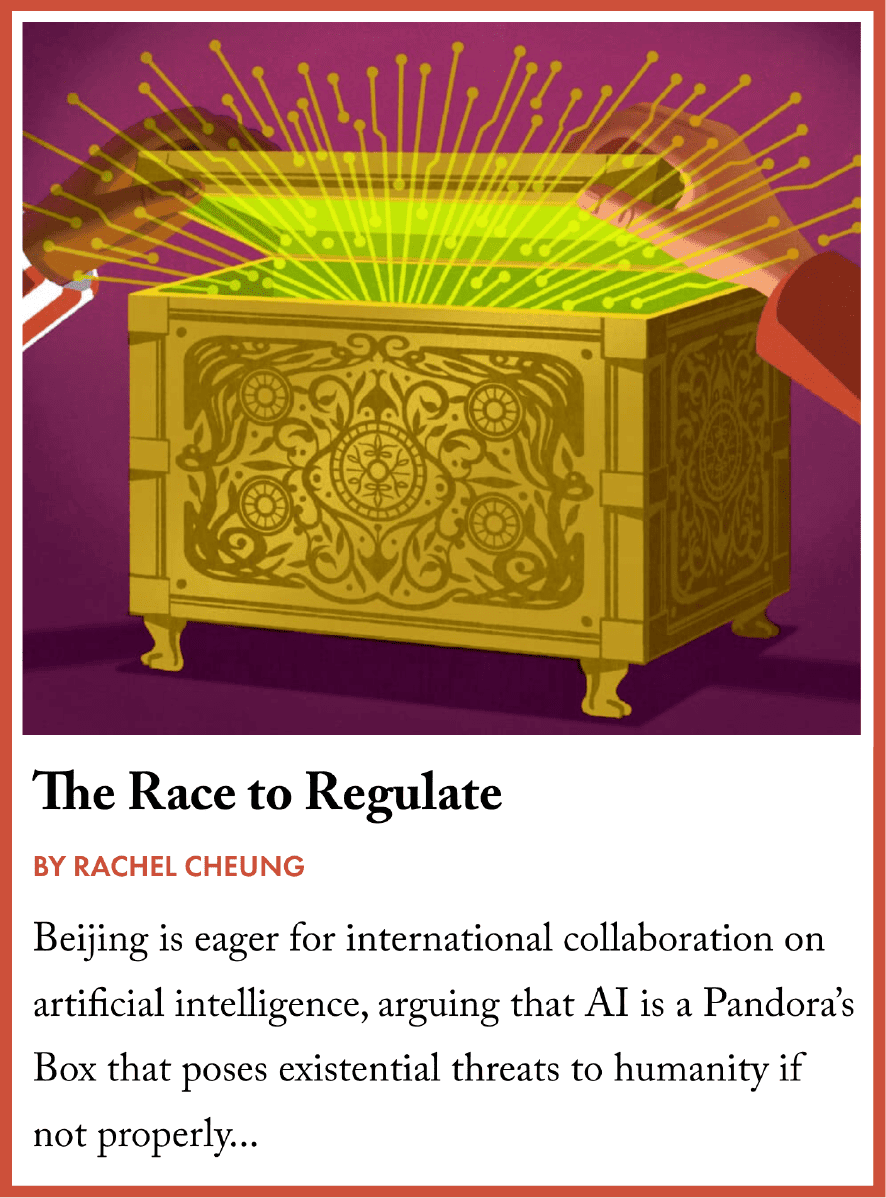

We also need policies related to transparency and disclosure from AI companies and what they’re doing. So we’re not saying: “here’s a very specific thing that you have to do or that you’re not allowed to do,” but rather “you have to tell us what you’re doing. You have to tell us what kind of things you’re building, why you think they’re safe, what kind of risks you’re seeing.”

We also need policies for tracking real world incidents. So when an AI system does cause harm in the real world, how do we make sure that we know that that happened, why it happened, and try and prevent it from happening next time? It’s the kind of thing that we do with aviation. If there’s a plane crash, we have pretty good infrastructure in place to keep track and investigate. A lot of the policies that are most needed right now are about helping us see what’s coming and figure out how to adapt to it, rather than taking a stand on particular issues or ways of fixing them.

Then there’s the question of what kind of risks AI systems might pose. If you have a system that can code — and a lot of language models are good at coding — that helps software developers with their jobs. But at some point that system is pretty likely to also be good at hacking as well. When do we get worried about that? What level of being good at hacking is worrying, and how do we measure how good it is at hacking? We don’t have good ways of doing that. We have lots of different ad hoc approaches that people use, but it’s all very nascent and squishy so far.

A big challenge for implementing a better system for tracking AI incidents is to figure out what counts. For example, self-driving cars crashing: I think that’s a good thing to keep track of, and in lots of places that’s already getting tracked alongside automotive accidents in general. But maybe you need to look at what kind of information is being collected. What kind of investigative powers does the government have to try and figure out what happened there? And what other kinds of harms should be tracked?

| MISCELLANEA | |
|---|---|
| RECENT READ | One book I loved recently was The Dream Machine, by M. Mitchell Waldrop. It’s a history of computing masquerading as a biography of one early computer scientist, J. C. R. Licklider. |
| FAVORITE MUSIC | One genre I think is underrated is Renaissance choral music. Le Poème Harmonique is a French group with some gorgeous recordings of this style. |

A major problem that has happened in the real world, unfortunately, is using AI systems that are technically very basic to determine who gets benefits, or to detect benefits fraud. There have been a few major cases where algorithmic systems have had massive false positive rates, accusing people of committing welfare fraud when they’re not. That’s the kind of thing that can ruin someone’s life pretty quickly, and right now, all we have is information from media reports. These incidents are not being tracked in any kind of systematic way.

What does the state of AI regulation look like in Washington? Do you think Congress will, or can, pass legislation to regulate the development of AI?

I’m of two minds. AI is the kind of challenge that needs sustained attention rather than quick fixes. So I’m encouraged by the fact that Congress, last year, showed itself to be willing to really invest in learning about the technology and not just say “let’s pass one AI bill and check it off our list and move on.”

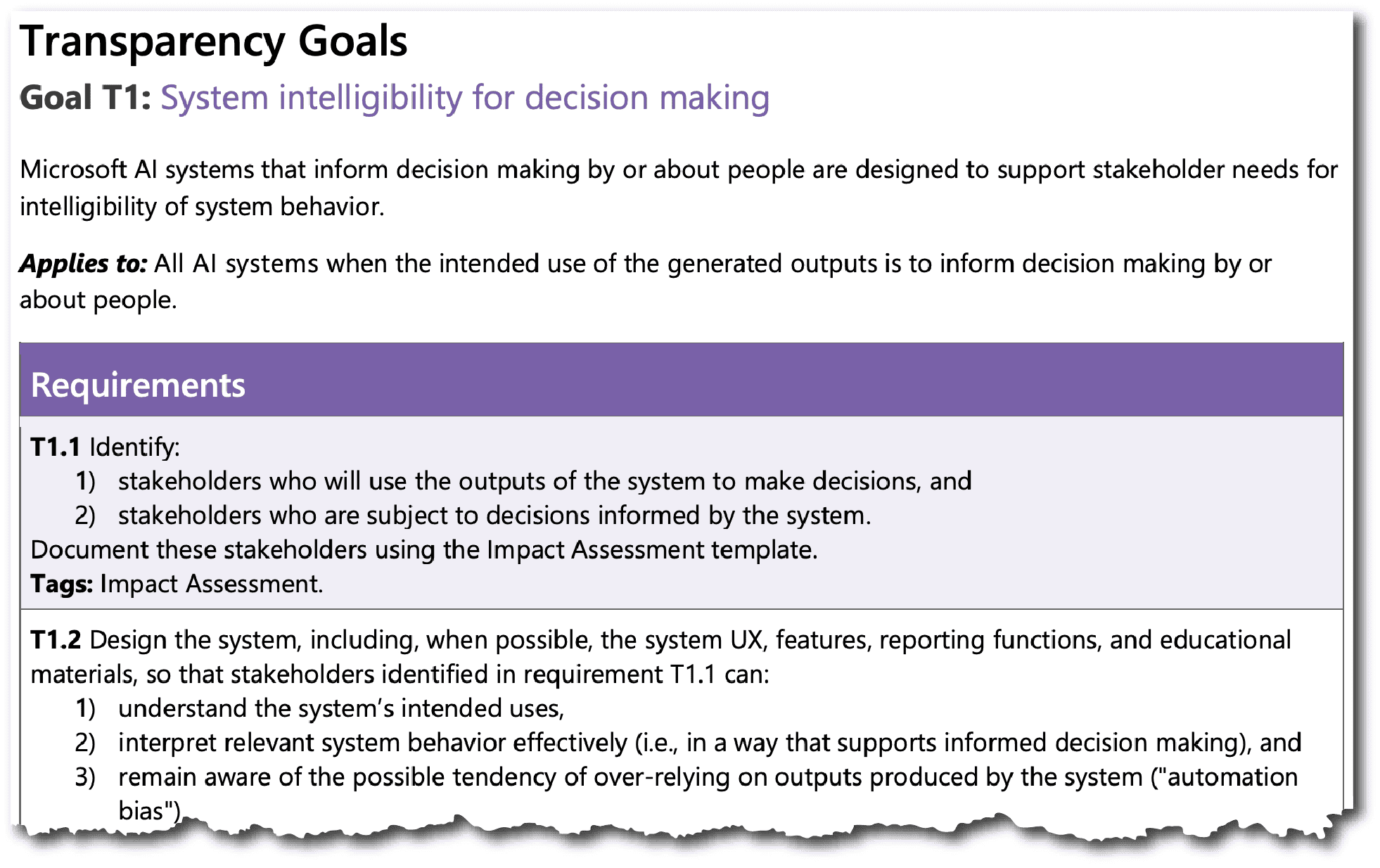

Intelligence Policy in the U.S. Senate’, released by the Bipartisan Senate AI Working Group, May 15, 2024.

On the other hand, I was hoping that we would see more of the energy that was there last year. We had a big push led by [Senate majority leader] Chuck Schumer, who ran nine insight forums that were supposed to be a new and upgraded version of a regular committee hearing. They released a big roadmap [in May] that ended up being relatively vague in a lot of places. On lots of issues, it suggested that committees should consider whether there’s something to legislate here, as opposed to actually moving the ball forward and making progress on questions such as: what kind of legislation might be needed? What are the trade-offs? What’s a good balance to strike?

The roadmap also got some criticism for being pretty industry friendly. By far the most detailed, fleshed out places were about ways that the government could fund AI development, which is the kind of government involvement that industry loves most.

I think we will see some AI-related provisions in, for example, the [National Defense Authorization Act, Congress’ annual defense spending bill], which is one of the must-pass bills that everyone hangs things onto. In past years, there have been AI provisions there. So I don’t want to say Congress will do nothing on AI, but we seem to be pretty far from any kind of comprehensive action. Of course, a lot will also depend on what the government looks like following the elections and who controls the Senate and the presidency.

Is there a concern that if the U.S. takes a light touch, it’ll effectively outsource regulation to Europe or China, like what’s happened with data privacy to some extent? Or to states such as California with SB1047?

[Note: SB1047 was a controversial state AI safety bill passed by both houses of the California legislature. On September 29th, after this interview took place, it was vetoed by Governor Gavin Newsom.]

It’ll be really interesting to see. Certainly the EU is putting its stake in the ground with the AI Act. The EU loves to talk about the ‘Brussels effect’ of policy that they make and which then spreads elsewhere. I think they’re currently hoping that will happen with the AI Act.

I am fascinated to see what happens with 1047 in California. One of the big objections has been that these kinds of things should be handled at the federal level. I agree in principle, but in practice I don’t see that happening anytime soon. If that bill doesn’t pan out, and if we have an incoming Congress that looks similarly unwilling to do anything significant, I wonder whether California will revisit it, or whether other states will look at what they should be doing. We have seen some legislation already passed. There’s a bill in Colorado and an employment discrimination law in New York. Congress’s lack of action does create a vacuum, and while it may be fine for states to fill some of that vacuum, if it leads to states legislating in ways that contradict each other that’s not really helpful for anyone.

As for China, it is interesting that a defining feature of the U.S.-China relationship is how intertwined the two countries are. But when it comes to AI, they’re not as intertwined. Most U.S. AI providers don’t necessarily want to provide their models in China, where there are generative AI rules in place. If they’re providing a generative AI service, they probably can’t offer it there. And likewise, the Chinese government is pretty happy to block most international providers and impose these regulations so that [Chinese and international AI providers] stay bifurcated. So I don’t necessarily see Chinese regulations as likely to naturally seep over into the U.S. context.

Some big tech executives and investors have opposed efforts to regulate AI, claiming that doing so would cede ground to China. What do you think?

I don’t buy it for a few reasons. For one, China is already regulating very heavily. A lot of U.S. perceptions of China are very vibes-based, including a sense that China just lets their tech sector rip. Obviously, if you pay attention to actual Chinese politics and policy, that’s not true.

Another reason is that China is not beating the U.S. right now, and isn’t particularly close to doing so. They’re also facing really significant macro headwinds from the general economy, unemployment, investment levels, and semiconductor controls.

Lastly, one big reason I don’t buy this argument is it can only hold if regulation necessarily slows down innovation, whereas I believe regulation can be neutral, even positive, for innovation in a sector like AI, where questions about reliability and consumer trust are so central. There was a recent study that found that when consumers see AI in the description of a product, it makes them want to use it less, not more. If you look at food safety, for example, regulation can actually bring trust. And that is a really good thing from the perspective of those sectors. Allowing the prospect that China might catch up to lead us to throw out any possibility of regulating AI would be really mistaken.

You were previously on the board of OpenAI, which has been criticized for its approach to AI safety. In May, for example, it dismantled its long-term AI risks team. Are U.S. AI companies taking risks seriously?

It depends a lot on the people or the company in question. Different experts have very different views on these risks. And then separate from the question of whether people are thinking about the risks is the question of incentives and whether they’ll be able to adequately invest in identifying and mitigating risks. It’s probably not a great idea to leave that in companies’ hands, given the investor pressure they face to make money and to be out in front of competitors.

| MISCELLANEA | |
|---|---|
| FAVORITE FILM | I am anything but a film connoisseur, but I enjoyed Knives Out very much. |
| MOST ADMIRED | Impossible to pick a single person, but Bryan Stevenson of the Equal Justice Initiative comes to mind. He is a black lawyer who fought for the rights of death row inmates in the Deep South — incredibly brave and selfless work. |

Part of the problem is that developing a language model is massively compute heavy and takes enormous amounts of capital to be competitive. You have to raise billions of dollars, and in order to do that you have to look like you’re at the front of the pack. I have a lot of friends working at multiple different AI companies who talk about the enormous time pressure that they face to get things out, to push them to production and be seen as leading. That’s a pretty worrying dynamic with potentially major downsides. At certain points, as AI continues to progress, you need to have a more cautious or coordinated approach, and those kinds of competitive dynamics are not going to help.

[Note: In late September, Reuters reported that after years of operating under the control of a non-profit board established with the purpose of ensuring the mission of creating “safe AGI that is broadly beneficial,” OpenAI is considering restructuring its business into a for-profit corporation.]

Do you think OpenAI is taking these risks seriously?

I think I’ve said what I want to say elsewhere about that.

Is the U.S. or China ahead in AI? How do you think about that comparison?

It’s impossible to give a single answer, but that doesn’t mean that it’s impossible to give any answer. If you’re focused on the most advanced general purpose models, the U.S. is ahead by something like one to two-and-a-half years. If you look at AI for surveillance, China is clearly ahead. It’s not hard to explain why. The U.S. has had, at best, a yellow light in terms of public perception and policy approach [to surveillance], whereas China has had a blazing green light for companies using AI for surveillance.

So this is referring to the field of computer vision?

Primarily vision, yes, but not just facial recognition. Also gait recognition, voice recognition. Chinese companies are being given access to massive government databases that they can train their systems on, and getting large government procurement contracts to deploy them and work out the bugs. It’s not surprising that they’re ahead there.

A trial of a facial recognition software developed by China’s SenseTime, demonstrating an ID matching process. Credit: Robert McGregor via YouTube

But if you’re talking about anything other than those general purpose models or surveillance, it gets much harder [to compare]. You have to zoom in more and look at the pace of diffusion. How are we defining those different things? Are we looking at particular sectors?

To the extent that I could give an overall answer, it seems obvious that the U.S. and China are the two biggest players in AI as countries and the U.S. often has the edge, but not always. That would be my attempt at a synthesis.

One of the Biden administration’s defining policies on China has been its export controls on AI chips, motivated in large part by concern about how China might leverage its AI development for military purposes. Against that backdrop, is it realistic to expect China’s cooperation in managing AI safety risks?

So far, it seems like there’s been surprisingly little retaliation for the export controls. And it seems like the controls might have actually even contributed to the first set of track one talks that the U.S. and China had on AI safety in Geneva earlier this year. So it doesn’t seem at all obvious that the controls have damaged the prospect of cooperation. But I expect broader dynamics in the U.S.-China relationship to be one of the key factors determining whether it’s possible to collaborate on AI safety, and I do think the controls were bad for the broader dynamics.

Left: Officials from the U.S. and China hold the first U.S.-China intergovernmental dialogue on artificial intelligence in Geneva, May 14, 2024. Right: A statement released by the National Security Council spokesperson on the AI talks. Credit: MOFA, The White House

Are there specific areas related to AI where you think country-to-country dialogue is more critical?

A lot of AI safety issues don’t require that kind of international or multilateral coordination, like making sure self-driving cars don’t crash, or ensuring good results for AI in healthcare.

The kinds of issues where country-to-country dialogue is necessary include AI automation in the military, similar to how the U.S. and the Soviet Union during the Cold War had a mutual interest in preventing the accidental use of nuclear weapons and preventing misperceptions from leading to escalation. There are similar dynamics with AI, where you don’t want a poorly designed system to malfunction and thereby start some sort of crisis escalation dynamic, or lead to misperceptions about the other side’s intentions.

Another set of issues would be around the capabilities of non-state actors. This doesn’t seem to be a problem with today’s AI systems, but we’re talking about the possibility of AI helping with bio-weapon development or helping some not very capable hackers upgrade to having much more sophisticated hacking skills. If there are things that can be done to prevent that proliferation, that’s in the interest of both of these great powers.

A final example is more in the sci-fi direction, but it’s something worth taking seriously, which is losing control of advanced AI systems altogether. If you get in this race-to-the-bottom dynamic, where you feel like you have to keep developing and deploying more advanced AI systems because your competitors are going to be doing the same, that is a really unsafe equilibrium. To the extent that that is a risk that both sides take seriously, I think they would both see it as in their interest to try and communicate and coordinate on avoiding mutually undesirable outcomes.

Eliot Chen is a Toronto-based staff writer at The Wire. Previously, he was a researcher at the Center for Strategic and International Studies’ Human Rights Initiative and MacroPolo. @eliotcxchen